In a recent conversation with DoneMaker, I unpacked why Social Media is not just noisy and distracting: it is a regulatory, ethical, and operational hazard for businesses and professionals. If you depend on Social Media to reach customers, run promotions, collect leads, or train AI models, this guide is written for you. Below I walk through the practical problems I encountered when I left Instagram, the legal and ethical issues you need to know, step-by-step actions you can take, and the safeguards your business should put in place right now.

1. Why leaving Social Media is harder than you think

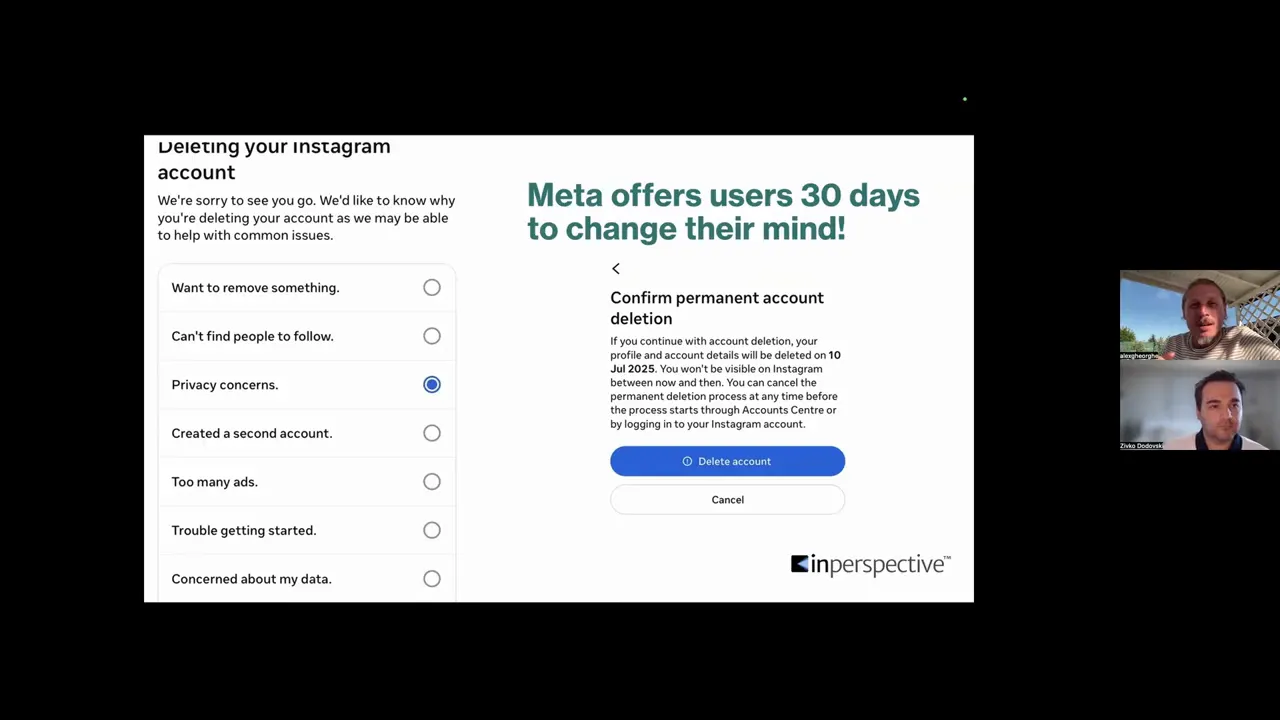

When you decide to leave a platform like Instagram, it’s not as simple as tapping “delete.” The platforms are built to keep you there, because your attention equals ad revenue. You’ll encounter multiple friction points: repeated prompts to stay, soft-deactivation options, and 30-day grace periods, all designed to make you reconsider. On top of that, backend retention can stretch to 90 days, so your data outlives your decision to leave.

If you’re thinking, “That’s fine, I’ll just delete my account and be done,” you’ll likely see several screens asking why you want to leave, offering alternatives like “take a break” or “temporary deactivation,” and even presenting you with features designed to persuade you to stay. You can remove the app from your phone, which helps, but the platforms still keep copies of your data for a while. From a privacy standpoint, you should treat that retention period as a risk window: your data may still exist even after you hit “delete.”

2. The blunt truth: Social Media platforms are ad agencies

If you want to understand why Social Media behaves the way it does, you need to understand the business model. Platforms like Facebook and Instagram reduce their entire value proposition to one sentence: “We sell ads.” That’s not an opinion; it is how they have described themselves when questioned by lawmakers and regulators. When a platform’s commercial goal is maximizing ad impressions and tailoring ads to individual behavior, privacy is often an afterthought or a negotiable feature.

You should expect features, algorithms, and interfaces to be built primarily to increase engagement, especially engagement that helps advertisers. For your business, that means the same platform that helps you reach customers is also collecting data about how those customers behave, which may later be used in ways you don’t control.

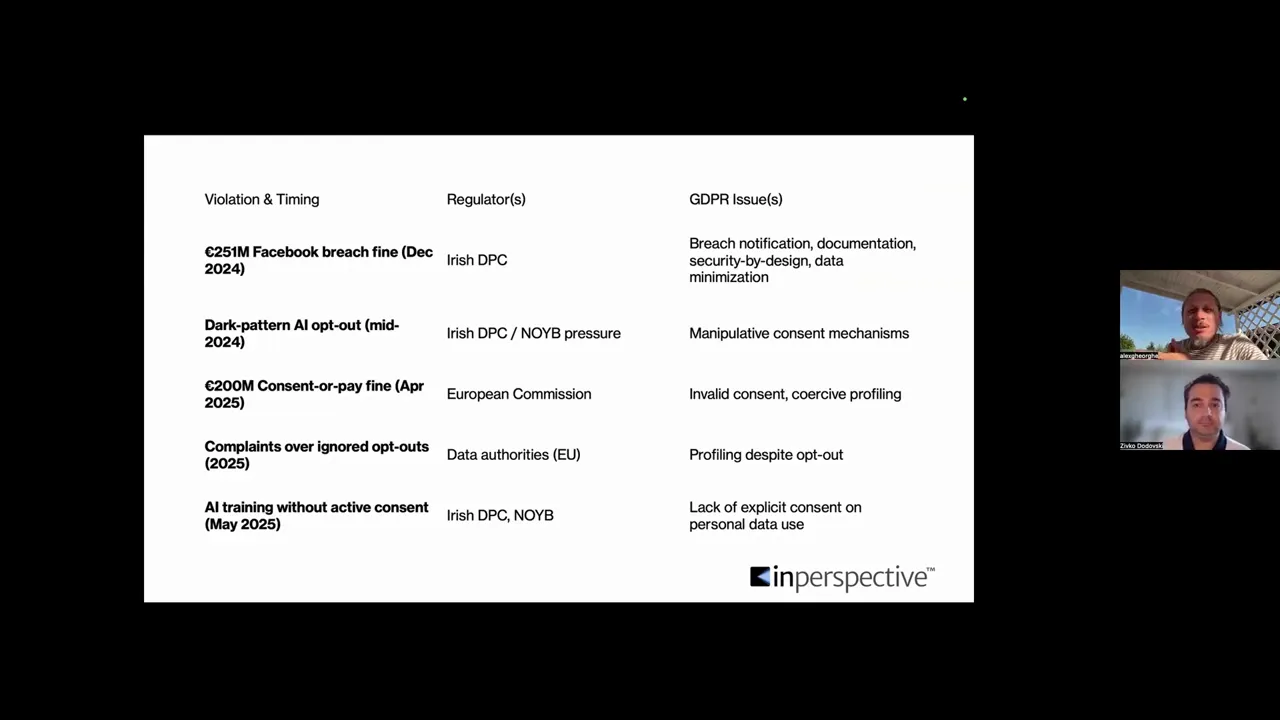

3. Real harms: why regulators and courts are paying attention

When your presence on Social Media involves minors, mental health, or promotional campaigns that collect personal data, you’re in a sensitive zone. Recent lawsuits and regulatory actions have focused on internal documents showing that platforms knew about harms, such as studies indicating that certain features worsen body image issues in young people.

If you use Social Media to target or attract young people, or if you run campaigns that collect personal data from participants, you must assume regulators and plaintiffs will scrutinize your practices. The platforms themselves have faced multi-district litigation with thousands of plaintiffs; your business can be affected either directly (through data exposure) or indirectly (through stricter rules that limit how you can operate).

4. Dark patterns, “consent or pay,” and the new privacy marketplace

One of the more disturbing trends is the normalization of dark patterns and pay-for-privacy mechanics. Some Social Media companies have offered users the option to pay a monthly fee to avoid personalized ads, effectively “buying” privacy from the platform. This raises two problems for you:

- Equity and fairness: Privacy becomes a commodity. If only paying users get reduced profiling, non-paying users, potentially including your customers, remain heavily profiled.

- Regulatory exposure: Regulators see dark patterns and coerced consent (consent-or-pay) as violations in many jurisdictions. If your business routes personal data through a platform that uses such techniques, your operations can become entangled in enforcement actions.

From a practical perspective, you should always evaluate opt-outs and consent flows. If the platform makes it difficult for users to opt out of profiling, or moves consent into a paywall, treat that as a red flag for doing business there or integrating that platform with your systems.

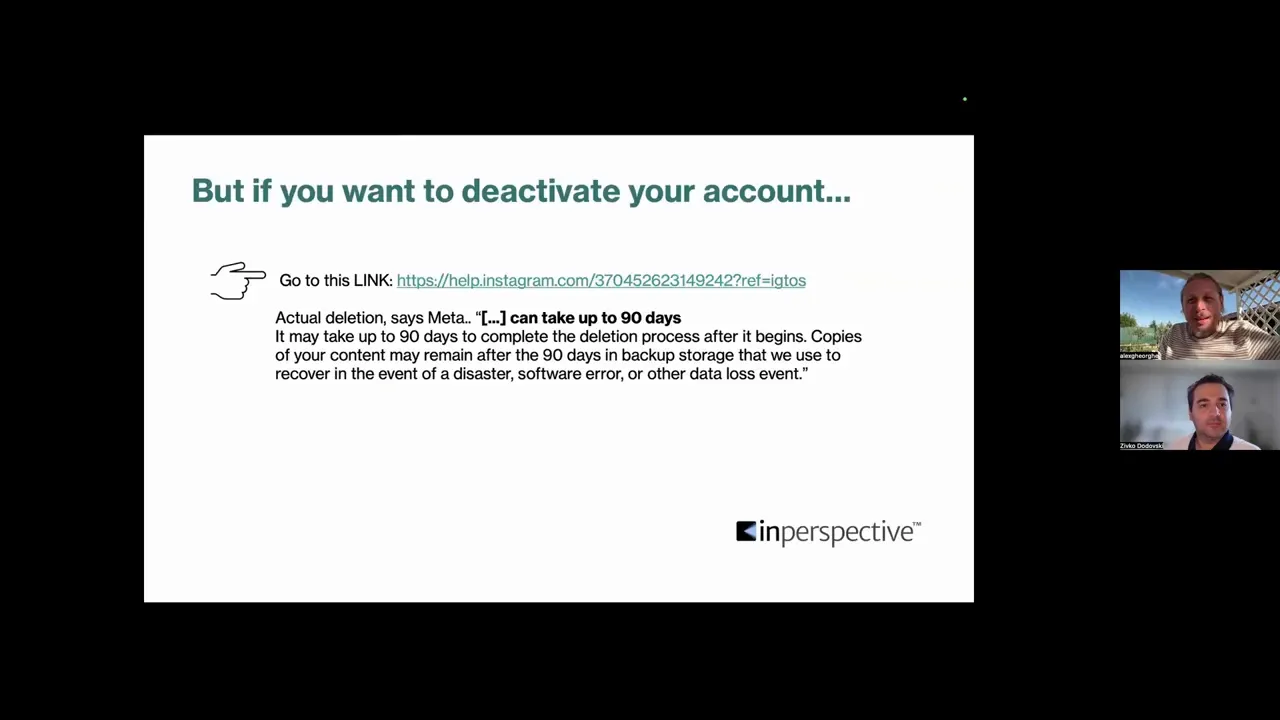

5. The deletion illusion: 30 days, 90 days, and persistent copies

When you press “delete account,” platforms typically give you a 30-day window to change your mind. After that, they claim the account is “permanently deleted,” but you’ll still see a timeframe (commonly up to 90 days) during which they may be finishing deletion processes across backup systems and secondary servers.

From a business risk perspective, you must assume that copies of personal data, analytics, and logs can persist in backups for months. That has consequences:

- If you run a promotion that collects entries on Social Media, those entries may remain on the platform or in backups longer than you think.

- If you rely on a platform for CRM or lead collection, you must plan for the possibility that deleting a contact from the platform doesn’t immediately erase derived datasets you or third-party partners hold.

If retention and correct deletion are important to you, push platforms and vendors for clear contractual commitments, and test deletion processes during vendor due diligence. If you’re a business leader, insist on deletion SLAs in supplier agreements and treat Social Media platforms like any other third-party data processor.
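
To make the 30-day and 90-day windows concrete, here is a minimal sketch of how you might track a deletion request during vendor due diligence. The function names are hypothetical, and the assumption that both windows are counted from the request date is mine; check each platform’s or vendor’s actual terms before relying on it.

```python
from datetime import date, timedelta

# Assumed windows, taken from the figures quoted above; real values vary by platform.
GRACE_PERIOD_DAYS = 30
BACKUP_PURGE_DAYS = 90


def deletion_timeline(requested_on: date) -> dict:
    """Return the dates worth tracking after a deletion request.

    Assumption: both windows are counted from the day of the request.
    """
    return {
        "requested": requested_on,
        "grace_period_ends": requested_on + timedelta(days=GRACE_PERIOD_DAYS),
        "backups_expected_clear": requested_on + timedelta(days=BACKUP_PURGE_DAYS),
    }


def is_overdue(requested_on: date, today: date) -> bool:
    """True once the assumed backup purge window has fully passed."""
    return today > deletion_timeline(requested_on)["backups_expected_clear"]
```

A check like this can feed a reminder to ask the vendor for deletion confirmation once the window has passed, which is what a deletion SLA is meant to make enforceable.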

6. Three immediate moves you must make if you use Social Media for business

When you run a business presence on Social Media, do not assume “being there” is the same as acting responsibly. Here are three prioritized actions you should take today:

- Limit scope and purpose: Define exactly why your business is on each Social Media platform. If your goal is to advertise products, keep your activity focused on advertising rather than expanding into unrelated data collection.

- Harden promotional links: If you run contests, giveaways, or use links to collect entries, don’t post open, unsecured forms. Use proper HTTPS endpoints, access controls, and CAPTCHAs; vet third-party vendors and test for scraping.

- Update your terms and privacy notices: Make it explicit why you collect data, where it goes, and how long you keep it. Include a section that explains your Social Media presence and the data flows involved.

If you fail on any of these, you expose your customers to scraping and data leakage, and you expose yourself to ransom demands from criminals holding lists scraped from insecure promotional links. In Europe, paying a ransom to criminals for leaked customer data can compound the legal violations.
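
One possible way to avoid posting a fully open form is to hand out signed, expiring links and reject submissions that do not carry a valid token. The sketch below uses Python’s standard `hmac` module. The function names, the token layout, and the `SECRET_KEY` value are illustrative assumptions, not a prescribed design, and this complements HTTPS, CAPTCHAs, and vendor vetting rather than replacing them.

```python
import hashlib
import hmac
import time
from typing import Optional

# Hypothetical secret; in practice load it from a secrets manager, never from source code.
SECRET_KEY = b"replace-with-a-long-random-secret"


def _signature(payload: str) -> str:
    return hmac.new(SECRET_KEY, payload.encode(), hashlib.sha256).hexdigest()


def sign_entry_token(campaign_id: str, expires_at: int) -> str:
    """Build a token of the form '<campaign>:<expiry unix time>:<signature>'."""
    payload = f"{campaign_id}:{expires_at}"
    return f"{payload}:{_signature(payload)}"


def verify_entry_token(token: str, now: Optional[int] = None) -> bool:
    """Accept a token only if it is well formed, untampered, and not expired."""
    try:
        campaign_id, expires_str, signature = token.rsplit(":", 2)
        expires_at = int(expires_str)
    except ValueError:
        return False
    expected = _signature(f"{campaign_id}:{expires_at}")
    # Constant-time comparison on bytes avoids leaking how much of the signature matched.
    if not hmac.compare_digest(signature.encode(), expected.encode()):
        return False
    current = int(time.time()) if now is None else now
    return current < expires_at
```

A form handler would call `verify_entry_token` before storing an entry, so a scraped or guessed URL without a valid, unexpired token collects nothing.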

7. How to design consent and data flows for Social Media integrations

When you embed Social Media plugins, run social campaigns, or use Social Media for lead capture, you must design data flows with privacy in mind. That means:

- Map data flows end-to-end: know what data is collected, where it goes, and who processes it.

- Use explicit, granular consent: avoid bundling unrelated consents or using pre-checked boxes.

- Limit data retention: keep personal data only as long as the stated purpose requires.

- Protect export channels: if you export leads to CRMs, ensure secure transfer and restrict access within your organization.

These are not “nice to have” items. Under laws like the GDPR, data minimization and purpose limitation are core principles. If you build Social Media processes that ignore them, you increase both compliance risk and operational exposure.
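
The mapping step above can start as a small structured inventory rather than a diagram. The following is a minimal sketch, assuming a hypothetical `DataFlow` record and `find_gaps` helper; the field names are illustrative and should be adapted to your own register of processing activities.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class DataFlow:
    source: str                    # where the data is collected, e.g. a lead form
    data_fields: List[str]         # which personal data fields are collected
    destination: str               # where the data ends up
    processor: str                 # who processes it
    purpose: str                   # why it is collected
    lawful_basis: Optional[str]    # e.g. "consent"; None means undocumented
    retention_days: Optional[int]  # None means no retention limit has been set


def find_gaps(flows: List[DataFlow]) -> List[str]:
    """List flows that lack a documented purpose, lawful basis, or retention limit."""
    issues = []
    for flow in flows:
        label = f"{flow.source} -> {flow.destination}"
        if not flow.purpose.strip():
            issues.append(f"{label}: no documented purpose")
        if flow.lawful_basis is None:
            issues.append(f"{label}: no documented lawful basis")
        if flow.retention_days is None:
            issues.append(f"{label}: no retention limit")
    return issues
```

Running `find_gaps` over the inventory gives you a short, reviewable list of flows that do not yet meet the purpose-limitation and retention points listed above.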

8. AI, Social Media data, and why you must involve privacy early

AI is now the feature everyone plans to add. But when you train AI models using data that originates from Social Media, you enter a complicated legal and ethical area. A few practical rules you should apply:

- Involve privacy people from day one: If you’re planning an AI project, bring your DPO or a privacy consultant into the project kickoff. Getting privacy input late means costly rework, feature cuts, or halting projects.

- Clarify what legislation applies: AI regulations (like the EU’s AI Act) and data privacy laws (like GDPR) operate in parallel. The AI Act may govern model risk levels and transparency, but GDPR governs the personal data you use to train models.

- Limit training data and access: Don’t give developers unlimited access to raw Social Media feeds. Use synthetic, anonymized, or minimized datasets whenever possible and document the legal basis for each dataset.

- Set retention limits: If a model requires personal data during training, specify a retention timeline: keep the raw data only as long as necessary, then delete or anonymize it.

One of the harder facts here is that many large models were trained on scraped data where consent or lawful basis was unclear. As a company building models or integrating third-party AI, you need contractual protections and technical controls to avoid inheriting unknown liabilities.
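
As an illustration of limiting training data, the sketch below keeps only an allow-list of fields and replaces the user identifier with a keyed hash before a record reaches developers. The field names, `ALLOWED_FIELDS`, and `PSEUDONYM_KEY` are hypothetical. Note that this is pseudonymization, not anonymization: the output can still be personal data under GDPR, so a lawful basis and retention limit are still needed.

```python
import hashlib
import hmac

# Hypothetical allow-list: only fields the model actually needs survive.
ALLOWED_FIELDS = {"text", "language", "created_at"}

# Hypothetical key; store it separately from the dataset and restrict access to it.
PSEUDONYM_KEY = b"replace-with-a-long-random-secret"


def minimize_record(record: dict) -> dict:
    """Drop every field not on the allow-list and pseudonymize the user ID."""
    minimized = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    user_id = record.get("user_id")
    if user_id is not None:
        digest = hmac.new(PSEUDONYM_KEY, str(user_id).encode(), hashlib.sha256)
        # A keyed hash gives a stable reference without exposing the raw ID.
        minimized["user_ref"] = digest.hexdigest()[:16]
    return minimized
```

Free-text fields such as `text` can still contain names or contact details, so a step like this reduces exposure but does not remove the need for legal review of each dataset.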

9. Your Social Media cleanup checklist (practical and runnable)

Use this checklist as a working document to reduce immediate risk associated with Social Media use.

- Inventory: List every Social Media account (company and employee-run) and the data each collects.

- Purpose Statement: Document why you maintain each account and what business outcome it serves.

- Terms & Privacy: Publish updated privacy pages explaining how Social Media interactions are processed.

- Vendor Contracts: For any third-party Social Media tools, add data processing terms and deletion SLAs.

- Promotions: Secure forms and ensure promotional links are behind authenticated pages or secure endpoints.

- Consent Flows: Replace vague or bundled consents with clear, granular options and record consent evidence.

- Retention Policy: Set retention periods for Social Media-derived data, and don’t keep it longer than needed.

- Training Data: Prohibit unrestricted use of Social Media data for model training unless legally vetted and documented.

- Incident Plan: Add Social Media data leaks to your incident response plan with clear notification steps.

- Employee Guidance: Train marketers and community managers on data hygiene and privacy-aware posting.
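
The retention item in the checklist can be backed by a simple scheduled job. Here is a minimal sketch, assuming hypothetical categories and limits in `RETENTION_LIMITS` and records stored as dictionaries with `category` and `collected_at` keys; the actual periods are a legal and business decision, not something this code can determine.

```python
from datetime import datetime, timedelta

# Hypothetical retention limits per data category.
RETENTION_LIMITS = {
    "contest_entry": timedelta(days=90),
    "lead": timedelta(days=365),
}


def is_expired(category: str, collected_at: datetime, now: datetime) -> bool:
    """True if the record is older than its category's limit.

    Raises KeyError for a category with no defined limit, so data without
    a retention policy is surfaced instead of silently kept.
    """
    return now - collected_at > RETENTION_LIMITS[category]


def split_by_retention(records: list, now: datetime) -> tuple:
    """Separate records to keep from records that are due for deletion."""
    keep, purge = [], []
    for record in records:
        expired = is_expired(record["category"], record["collected_at"], now)
        (purge if expired else keep).append(record)
    return keep, purge
```

The `purge` list is what you would delete or anonymize, and log, on each run.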

10. What I did when I left Instagram — lessons you can apply

I decided to delete my Instagram account. Here’s what I learned and what you should do if you or staff plan a similar move:

- Remove the app first: Delete the app from your phone to reduce temptation and prevent background collection or notifications.

- Export what you need: If there’s business content you want to keep, download it before deletion, but be mindful of personal data contained in comments and DMs.

- Choose privacy as the deletion reason: When platforms ask why you’re leaving, select the privacy option to create a record of your intent (useful if you later need to escalate).

- Watch the 30/90-day windows: Expect the platform to promise permanent deletion after 30 days but to maintain copies for up to 90 days. Use that period to follow up if you need confirmation that your data is actually gone.

- Resist reactivation: The platform will actively try to pull you back through prompts and saved credentials. If you need to stay deleted, change your passwords and remove saved logins.

These steps apply to businesses too: if you decide to abandon a Social Media presence, plan the export, notify customers appropriately, update your privacy policy, and close open promotional links.

FAQ — Your pressing Social Media privacy questions answered

Q: Should my business leave Social Media entirely?

A: Not necessarily. Social Media is a powerful channel for marketing and customer outreach. But you should not treat it as a neutral or risk-free channel. If you stay, do so deliberately: limit scope, secure data flows, update terms, and harden integrations. If the platform’s business model or consent flows violate your policies or legal obligations, consider alternative channels.

Q: Is “pay for privacy” on Social Media legal?

A: Pay-for-privacy raises fairness and consent concerns and has already drawn regulatory scrutiny. In some jurisdictions, offering privacy only to paying customers can be seen as a dark pattern or coercive. Consult legal counsel before adopting similar pricing approaches; avoid tying fundamental privacy rights to payment.

Q: How long will Social Media platforms keep deleted data?

A: The usual public claim is deletion after a short window (e.g., 30 days), but both platforms and backup systems can retain copies for longer (commonly up to 90 days). From a compliance standpoint, assume that backups and derived data can persist unless a vendor contract explicitly defines otherwise.

Q: Can I use Social Media data to train my AI models?

A: Technically yes, but legally and ethically it depends. If the data includes personal data, GDPR or comparable laws apply in many regions. You need a documented lawful basis (consent is one option) or properly anonymized data, plus data minimization and robust access controls. Involve privacy experts early and document decisions in data processing agreements.

Q: What’s the single best thing I can do today to reduce Social Media privacy risk?

A: Start with mapping: list your accounts, the data each collects, where it flows, and who has access. That map will reveal your biggest vulnerabilities and help you prioritize quick wins like securing contest links, updating privacy notices, and tightening access controls.

Q: Where can I get help with Social Media privacy and AI compliance?

A: Look for a privacy consultant or DPO who understands both data protection law and practical system design. Choose advisors who can help map data flows, draft contractual terms, set retention SLAs, and create training for your marketing teams.

Social Media is not inherently evil. It is a tool with a powerful business model that can conflict with privacy, fairness, and long-term trust. If you want to keep using these platforms, do so intentionally: reduce unnecessary collection, secure the links and forms you publish, involve privacy experts early, and treat AI training data with skepticism unless you can document lawful bases and retention limits.

If you follow the checklist and prioritize these ten areas, you will substantially reduce the likelihood of privacy incidents, regulatory fines, and reputational damage. And if you decide to step away from a platform entirely, do it with a plan: export what you need, delete carefully, and update your policies to reflect the change.

Use this article as a working reference in your next marketing, legal, or AI project meeting. The risks are real, but with the right processes and mindset, you can continue to reach customers via Social Media while protecting their privacy and your business.

Watch the full podcast here: Why Social Media is a Privacy Nightmare for Businesses